Outdoor robots cannot run blindly. They operate across unpredictable terrains, in changing weather, and under continuous workloads. To navigate these conditions, they must process visual information with precision and reliability, which begins with integrating compute and vision systems that work together seamlessly. But that integration is rarely straightforward. Engineers often face mismatched interfaces, unstable links, and firmware conflicts that delay development and complicate field testing.

Star Robotics' Watchbot, an autonomous robot with a ZED X camera and D315 board, performs 24/7 surveillance and hazard detection. Credit: Star Robotics.

AVerMedia, a global leader in AI-driven edge computing solutions, and Stereolabs, a pioneer in 3D vision technology and AI-powered perception, have partnered to address this head-on. By combining a robust edge compute platform with a high-performance 3D vision system, they offer a pre-validated, deployment-ready solution. Hardware, firmware, and software are aligned out of the box, so integration becomes a starting point rather than a hurdle. This allows robotics teams to shift their focus toward application logic and field reliability, rather than debugging cables and drivers.

A Partnership Built to Accelerate Robotics Deployment

With integration already solved at the system level, the joint platform from AVerMedia and Stereolabs is designed for the real-world challenges of outdoor robotics. Hardware and software are co-developed and validated as a system, giving robotics teams a reliable starting point without the usual integration hurdles so they can build with confidence.

This partnership is also rooted in long-term support. Both companies co-develop reference materials, align on software roadmaps, and share technical documentation to support deployment. Customers benefit from consistent Board Support Package (BSP) updates and integration resources that shorten development cycles and reduce engineering overhead.

Cécile Schmollgruber, CEO of Stereolabs, described the value of this partnership clearly: "This collaboration with AVerMedia demonstrates the impact of physical AI in robotics. Combining our precise vision systems with AVerMedia's reliable edge compute platforms enables robots to understand and interact with complex environments in real time."

Together, AVerMedia and Stereolabs deliver a dependable platform that shifts the engineering team's focus from integration tasks to application design and field validation.

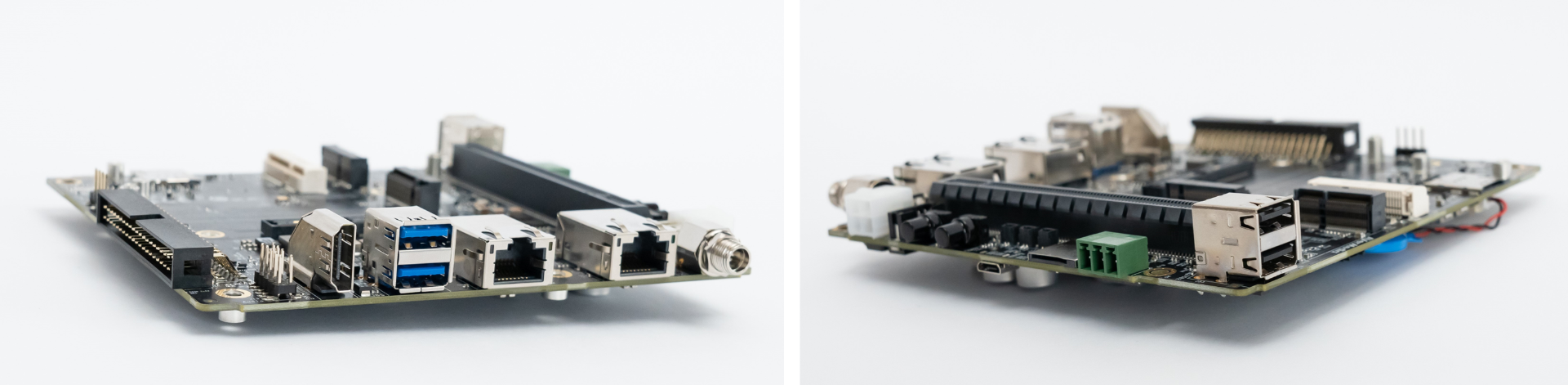

(Left) The D315 board from AVerMedia. Credit: AVerMedia. (Right) The ZED X One camera from Stereolabs. Credit: Stereolabs.

Under the Hood: The Validated Tech Stack

Deploying robotics in the real world demands a tech stack that simply works, even under challenging conditions. AVerMedia and Stereolabs have engineered a deployment-ready platform that simplifies vision integration while delivering robust performance for advanced AI workloads.

At the foundation of this solution are three core products:

- AVerMedia D315 carrier board: A production-ready edge computer for the NVIDIA® Jetson AGX Orin™ module, offering multi-camera GMSL2 support, built-in TPM security, 10 GbE networking, and out-of-band power control for robust field operations.

- Stereolabs ZED X Series cameras: An industrial-grade vision system based on multiple GMSL2 stereo cameras that fuse RGB, depth, and inertial data to deliver centimeter-level 3D perception in real time.

- Stereolabs ZED SDK: A modular software kit that runs on the D315 and exposes depth sensing, localization, mapping, and object detection APIs. Built-in AI models handle perception tasks across logistics, agriculture, and off-road applications, allowing developers to transition from prototype to deployment without rewriting core vision functions.

Together, these three components create a plug-and-play stack that enables engineers to focus on application logic rather than low-level integration.
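As background for the depth sensing mentioned above, the following minimal sketch shows the classical pinhole stereo relation, Z = f·B/d, that underlies depth from a calibrated stereo pair. It is an illustration only: the function names, focal length, and baseline are hypothetical values chosen for readability, not ZED X specifications, and the ZED SDK's own depth pipeline may work differently.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Classical pinhole stereo relation: Z = f * B / d (result in meters)."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity; negative is invalid.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_step(depth_m: float, focal_px: float, baseline_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change for a small disparity change at a given range.

    Derived from Z = f * B / d:  |dZ| ~= Z**2 / (f * B) * |dd|.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_step_px


# Hypothetical camera parameters (assumptions, not ZED X specifications).
FOCAL_PX = 1000.0    # focal length expressed in pixels
BASELINE_M = 0.12    # distance between the two lenses, in meters

z = depth_from_disparity(50.0, FOCAL_PX, BASELINE_M)
step_cm = depth_step(z, FOCAL_PX, BASELINE_M, disparity_step_px=0.1) * 100

print(f"depth at 50 px disparity: {z:.2f} m")
print(f"depth change per 0.1 px of disparity at that range: {step_cm:.2f} cm")
```

Because the depth step grows with the square of the range, sub-pixel disparity accuracy matters most for distant objects, which is one reason depth precision is usually quoted at a specific distance.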

Engineering for Reliability in the Field

The engineering teams co-designed firmware, power sequencing, and BSPs to ensure every interface behaves predictably. During validation, they discovered an I²C address overlap between the camera and the audio codec. They resolved this by adjusting the power-on timing and then ran cold- and warm-boot cycles to confirm stability. This process produced a single software image that recognized each camera the moment it was connected, eliminating the need for manual driver work.
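An address overlap of the kind described above can be flagged early by checking a bus map for duplicate addresses. The sketch below is a hypothetical illustration: the device names, bus numbers, and addresses are invented for the example and do not reflect the actual D315 design. It only detects a conflict; it does not reproduce the power-on timing fix the teams applied.

```python
from collections import defaultdict
from typing import NamedTuple


class I2CDevice(NamedTuple):
    name: str
    bus: int
    address: int  # 7-bit I2C address


def find_address_conflicts(devices):
    """Return {(bus, address): [names]} for each address claimed more than once on a bus."""
    claimed = defaultdict(list)
    for dev in devices:
        # 0x00-0x07 and 0x78-0x7F are reserved in the 7-bit address space.
        if not 0x08 <= dev.address <= 0x77:
            raise ValueError(f"{dev.name}: 0x{dev.address:02X} is outside the usable 7-bit range")
        claimed[(dev.bus, dev.address)].append(dev.name)
    return {key: names for key, names in claimed.items() if len(names) > 1}


# Hypothetical bus map (all names and addresses are assumptions for illustration).
BUS_MAP = [
    I2CDevice("camera_deserializer", bus=2, address=0x1A),
    I2CDevice("audio_codec", bus=2, address=0x1A),
    I2CDevice("tpm", bus=2, address=0x2E),
    I2CDevice("board_eeprom", bus=0, address=0x50),
]

conflicts = find_address_conflicts(BUS_MAP)
for (bus, address), names in conflicts.items():
    print(f"bus {bus}, address 0x{address:02X}: {', '.join(names)}")
```

Running a check like this against the board's device list during bring-up is cheaper than finding the conflict through intermittent boot failures.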

To ensure readiness beyond the lab, the joint roadmap covers thermal validation, EMI testing, and mechanical fit checks to ensure the system performs reliably in outdoor enclosures and mission-critical environments. Both companies maintain a shared issue-tracking system, so customers receive coordinated fixes and updates. Reference designs, pinout diagrams, and ROS 2 launch files are published in a single developer portal, streamlining evaluation.

Streamlining Robotics Integration

The D315 platform has already been adopted in use cases such as traffic monitoring, autonomous security patrols, and smart retail analytics. Feedback from these pilots drove improvements to cable strain relief, connector sealing, and on-board voltage regulation. The production release now ships with environmental ratings that match those of typical IP-rated robot enclosures. These deployments show this solution's capability to support real-world AI applications across various environments.

Paired with the ZED SDK, the platform offers a full software stack for spatial understanding. The SDK provides depth sensing, object detection, body tracking, and mapping, all powered by TERRA AI. It fuses vision, inertial, and optional GNSS data to deliver real-time 3D insights, even in harsh conditions such as low light or rain. Engineers can activate only the features they need, keeping the runtime efficient and focused on the application.

For AI and robotics teams, this platform means faster starts and fewer integration headaches. Engineers can begin system development with a pre-configured stack that removes early-stage hardware and software integration work. They simply mount the camera, seat the Jetson module, flash the image, and start building autonomy logic. Time previously spent on board preparation and interface debugging now shifts to perception tuning, navigation, and application testing: the activities that move a robot from prototype to revenue.

The AVerMedia D315 board showing its I/O interfaces. Credit: AVerMedia.

From Lab to Field: Star Robotics and Autonomous Security

This platform has already proven itself in the field. One real-world application is Star Robotics' Watchbot, an autonomous security and inspection robot designed for continuous operation across industrial facilities, critical infrastructure, and commercial sites. It illustrates how the D315 + ZED X + ZED SDK stack performs in service. Watchbot conducts round-the-clock monitoring and inspection, with a mission to automate routine patrols, detect safety hazards early, and reduce human exposure in high-risk areas.

Watchbot's perception suite centers on a Stereolabs ZED X stereo camera linked to an AVerMedia D315 carrier board that hosts the robot's Jetson compute. ROS 2 orchestrates navigation and sensor fusion, while NVIDIA DeepStream streams live video for remote monitoring and AI inference.

Star Robotics' fleet of 60+ Watchbot units has logged over 65,000 hours and 28,000 km of autonomous patrols across industrial and critical sites. Source: Star Robotics.

The new setup routes power and data through a single automotive-grade GMSL2 cable, giving Watchbot a stable link between the D315 board and the ZED X camera. On that same board, the ZED SDK converts the stereo feed into depth maps, object tracks, and localization data that the navigation stack can use immediately. Working alongside LiDAR and a 360° observation module with PTZ and thermal imaging, the AVerMedia–Stereolabs combination now anchors the robot's sensing suite.

Since its first trials, Watchbot has grown into a fleet of over 60 autonomous units. Collectively, they have logged 28,000 kilometers of driver-free travel and over 65,000 hours of surveillance at factories, airports, and critical facilities. Customers rely on this system because the integrated D315, ZED X, and ZED SDK stack keeps each patrol on route and every camera stream online, day after day, even in rain, fog, and direct sunlight.

Designed to Support Robotics Teams

The AVerMedia and Stereolabs platform is built not only for performance but also for the pace and realities of modern robotics workflows. From evaluation to deployment, it gives engineers a stable foundation to build on without losing time to low-level system integration.

Plug-and-play compatibility between the ZED X camera, the D315 carrier board, and the ZED SDK removes one of the most time-consuming steps in robotics development. Engineers no longer need to source custom drivers, patch SDKs, or modify BSPs just to establish reliable communication between camera and compute. The system boots into a working configuration with pre-validated firmware and software compatibility already in place.

This integration support extends across the entire development lifecycle. Both companies maintain aligned software roadmaps, coordinated BSP release schedules, and a shared set of resources, including sample applications, reference diagrams, and ROS 2 launch files, not to mention a joint issue-tracking system for rapid fixes. Customers can also engage directly with the engineering teams to troubleshoot camera initialization, optimize firmware settings, or review power sequencing.

Post-deployment, teams continue to receive updates aligned with JetPack releases and camera firmware upgrades. This reduces the need for internal workarounds and ensures long-term maintainability in production systems. Whether building a first prototype or scaling into production, robotics teams benefit from fewer surprises, faster iteration, and more time focused on core autonomy logic. This stack scales smoothly from early prototypes to full production fleets, supporting multiple use cases like outdoor robots, mobile inspection units, and autonomous vehicles.

Showcased at GTC Paris and Beyond

The AVerMedia–Stereolabs collaboration was on full display at NVIDIA GTC Paris 2025, where the companies unveiled their integrated multi-camera AI vision platform for robotics as part of the broader Jetson ecosystem. Designed for both autonomous mobile robots (AMRs) and humanoid systems, the platform demonstrated how synchronized 3D perception and compute can be delivered in a compact, rugged form factor.

The demonstration featured real-time multi-camera streaming and depth perception running on NVIDIA Jetson. Drawing on real-world deployments, it handled AI workloads for navigation, object detection, and safety, underscoring the platform's field readiness.

AVerMedia and Stereolabs at the NVIDIA GTC Paris 2025 event. Credit: AVerMedia.

The event brought together AI developers, robotics engineers, and ecosystem partners seeking tools to bridge the gap between prototyping and deployment. The D315 + ZED X + ZED SDK platform answered that call, showing that vision integration no longer needs to be an obstacle.

The GTC showcase also positioned the solution as commercial-ready for robotics OEMs. With validated BSPs, robust system integration, and out-of-the-box compatibility, it is now part of the broader NVIDIA Jetson ecosystem roadmap. Support for next-generation Jetson Thor modules, expanded camera configurations, and deeper ROS 2 integration is already underway, driven by feedback from early deployments.

For robotics developers and manufacturers, the presence at GTC confirmed the maturity of the platform and the depth of engineering behind it. The collaboration continues to show up where it matters: in the tools and environments that robotics teams depend on to move from prototype to production.

Conclusion

Integrating advanced vision and compute capabilities remains one of the most resource-intensive engineering hurdles for robotics teams building field-ready systems. AVerMedia and Stereolabs remove that barrier with a pre-validated platform and coordinated support model designed to accelerate development without compromising performance.

Robotics teams can prototype faster, scale confidently, and spend more time advancing autonomy instead of resolving interface issues. Whether powering a prototype or supporting a deployed fleet, the D315 + ZED X + ZED SDK stack delivers the reliability and flexibility required for real-world robotics.

To learn more, please contact AVerMedia at Service.AverAI@avermedia.com or contact Stereolabs at support@stereolabs.com.